The Science™ Did a 'Scoping Review' of 'Health Conspiracy Theories'

Courtesy of Kisa & Kisa 2025, we finally know how the Science™ works, replete with misinformation in the accompanying Kristiania University College's press release (oh, the irony)

There’s a new highly absurd, if borderline amusing, paper in Science Town™ that I think you should know about. Entitled ‘Health conspiracy theories: a scoping review of drivers, impacts, and countermeasures’, it was co-authored by Adnan Kisa & Sezer Kisa, and the study just came out in the International Journal for Equity in Health vol. 24, Article no. 93 (2025). Since it’s Open Access and thus freely available on the internet, I’ll limit myself to a few choice quotes from the Kristiania University of Applied Sciences’s accompanying press release (15 May 2025) and the above-mentioned paper.

You’re in for a wild ride on this one, that much I will divulge right away.

The paper is in English, and I’ve translated the press release for your enjoyment on this Pentecost weekend, with emphases and [snark] added.

A Summary of Kisa & Kisa (2025)

Background

Health-related conspiracy theories undermine trust in healthcare, exacerbate health inequities [don’t gloss over that term, for it’s a key wedge—and here’s WHO’s definition: ‘Health inequities are differences in health status or in the distribution of health resources between different population groups, arising from the social conditions in which people are born, grow, live, work and age. Health inequities are unfair and could be reduced by the right mix of government policies.’—], and contribute to harmful health behaviors such as vaccine hesitancy and reliance on unproven treatments [now you know what this piece is about: a call to further increase the role of gov’t in healthcare (sic)]. These theories disproportionately impact marginalized populations, further widening health disparities [‘health inequity’ is nothing but a set of ideological convictions that seek to shape societal relations, hence it must depart from the claim that some are more affected than others; who are these ‘marginalized populations’, you might ask?—Well, the Nat’l Library of Medicine has another, broader definition that is fully woke-fied nonsense (but nonetheless underwrites the paper): ‘Structural inequities refers to the systemic disadvantage of one social group compared to other groups with whom they coexist, and the term encompasses policy, law, governance, and culture and refers to race, ethnicity, gender or gender identity, class, sexual orientation, and other domains. The social determinants of health are the conditions in the environments in which people live, learn, work, play, worship, and age that affect a wide range of health, functioning, and quality-of-life outcomes and risks. For the purposes of this report, the social determinants of health are: education; employment; health systems and services; housing; income and wealth; the physical environment; public safety; the social environment; and transportation.’]. 
Their rapid spread, amplified by social media algorithms and digital misinformation networks, exacerbates public health challenges, highlighting the urgency of understanding their prevalence, key drivers, and mitigation strategies [so, to cut through this nonsense, the key issue isn’t these so-called ‘social determinants of health’, actually, but the ‘rapid spread’ of so-called ‘conspiracy theories’: with the prelims settled, let’s look for the main course here, shall we?].

Methods

This scoping review synthesizes research on health-related conspiracy theories, focusing on their prevalence, impacts on health behaviors and outcomes, contributing factors, and counter-measures [oh, would you look at all these fancy, science-ey terms that suggest to the casual reader that these are EXPERTS™ wielding such terminology]. Using Arksey and O’Malley’s framework [link added; that paper notes that, unlike systematic reviews, ‘a scoping study tends to address broader topics where many different study designs might be applicable’ and thus ‘is less likely to seek to address very specific research questions nor, consequently, to assess the quality of included studies’; in short: a scoping study is a bit like a stream of consciousness, so to speak; for an update on how these things are done™, see this piece] and the Joanna Briggs Institute guidelines [whose website features a ‘template for scoping reviews’, no less], a systematic search of six databases (PubMed, Embase, Web of Science, CINAHL, PsycINFO, and Scopus) was conducted. Studies were screened using predefined inclusion and exclusion criteria, with thematic synthesis categorizing findings across diverse health contexts [so, it’s a bit of a mix-and-match approach to whatever: no need for a pre-defined research question, a hypothesis, or a focus on one topic or another].

Results

The review revealed pervasive conspiracy beliefs surrounding HIV/AIDS, vaccines, pharmaceutical companies, and COVID-19, linked to reduced vaccine uptake, increased mistrust in health authorities, and negative mental health outcomes such as anxiety and depression. Key drivers included sociopolitical distrust, cognitive biases, low scientific literacy, and the unchecked proliferation of misinformation on digital platforms [in short and plain English: you naughty rabble, have you been dabbling in reading papers on your own?]. Promising countermeasures included inoculation [sic] messaging, media literacy interventions, and two-sided refutational techniques. However, their long-term effectiveness remains uncertain, as few studies assess their sustained impact across diverse sociopolitical contexts.

Conclusion

Health-related conspiracy theories present a growing public health challenge that undermines global health equity [those who ‘do their own research’ are apparently opting out of WHO-directed healthcare™, which cuts into Big Pharma’s business model—plus the authors get to virtue-signal like true experts™: a win-win]. While several interventions show potential, further research [grifters] is needed to evaluate their effectiveness across diverse populations and contexts. Targeted efforts to rebuild trust in healthcare systems and strengthen critical health literacy are essential to mitigate the harmful effects of these conspiracy beliefs.

Are you entertained yet?

Here’s a bunch more prose from the paper for good measure:

Our investigation is informed by a critical analysis of existing literature, noting a gap in studies that collectively examine the themes, sources, target audiences, impacts, interventions, and effectiveness of public health communication strategies against COVID-19 misinformation…structured around four pertinent questions…

What is the extent of COVID-19 misinformation? How can it be addressed?

What are the primary sources of COVID-19 misinformation?

Which target audiences are most affected by COVID-19 misinformation?

What public health communication strategies are being used to combat COVID-19 misinformation?

Well enough, but a bit much for a short paper; care to learn about their methods?

This scoping review was conducted following the methodology framework defined by Arksey and O’Malley [16] and elaborated upon by Levac et al [17]. This framework, recognized for its systematic approach, involves five stages: (1) defining the research question; (2) identifying relevant studies; (3) selecting appropriate literature; (4) charting the data; and (5) collating, summarizing, and reporting the results.

The literature search targeted 3 [in the abstract cited above, the authors claimed they searched 6 such databases] major databases: MEDLINE (PubMed), Embase, and Scopus. These databases were selected for their comprehensive coverage of medical, health, and social science literature. The search strategy [sic] was crafted using a combination of keywords and subject headings related to COVID-19 [at that point, we can be reasonably assured that the authors didn’t read most of the papers], misinformation, and public health communication. We used (“COVID-19” OR “SARS-CoV-2” OR “Coronavirus”) AND (“Misinformation” OR “Disinformation” OR “Fake news” OR “Infodemic”) AND (“Public health outcomes” OR “Health impacts”) AND (“Communication strategies” OR “Public health communication”).
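For the record, here is roughly how such a Boolean search string is put together. This is a minimal Python sketch of my own, not the authors' code: the helper name `build_query` and the list layout are assumptions for illustration, and only the search terms themselves come from the quote above. Terms inside a group are joined with OR, and the groups are chained with AND, so every additional group can only shrink the result set.

```python
# Hypothetical helper, not taken from the paper: rebuilds the quoted
# search string from its four term groups.
TERM_GROUPS = [
    ["COVID-19", "SARS-CoV-2", "Coronavirus"],
    ["Misinformation", "Disinformation", "Fake news", "Infodemic"],
    ["Public health outcomes", "Health impacts"],
    ["Communication strategies", "Public health communication"],
]


def build_query(groups):
    """Join terms within a group with OR and the groups themselves with AND."""
    clauses = []
    for terms in groups:
        # Quote each term so multi-word phrases are searched as phrases.
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


query = build_query(TERM_GROUPS)
print(query)
```

Note that a record has to match at least one term from all four groups to show up at all, which goes some way towards explaining why only a few hundred hits came back on a topic as heavily published as this one.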

Here are the paper’s inclusion/exclusion criteria:

Would you look at that: ‘case studies’ and ‘anecdotal reports’ were excluded—which is precisely the way new adverse events (that are, of course, always ‘singular occurrences’) are making their way to the wider field, e.g.,

Of course, doing so also excludes, say, case reports by pathologists and funeral home directors, such as the one featured below:

This is what was done by Kisa & Kisa:

The study selection process involved an initial screening of titles [whatever happened to ‘don’t judge a book by its cover’?] and abstracts to eliminate irrelevant studies, followed by a thorough full-text review of the remaining articles. This critical stage was conducted by the authors, each with expertise in public health communication and health services research [lol, really?], thereby enhancing the thoroughness and reliability of the selection process. In cases of disagreement, the reviewers engaged in discussions until a consensus was reached on the inclusion of each article. In addition, we adhered to the PRISMA-ScR (Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews) guidelines [18] to enhance the thoroughness and transparency of our review (see Multimedia Appendix 1 for the PRISMA-ScR checklist).

It gets worse the more one reads:

A total of 390 articles were identified from the 3 databases, of which, after removing 134 (34.4%) duplicates, 256 (65.6%) articles remained. Of these 256 articles, 69 (27%) were selected based on abstract searches. Of the 69 full-text articles, 27 (39%) were assessed for eligibility. Of these 27 studies, 21 (78%) were included in the scoping review (Figure 1).

Get that: of the 256 articles left after de-duplication (I’m being generous here), a measly 8 per cent (21 papers) were actually included in the review.
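The arithmetic is easy to check. Below is a small Python sketch of my own that recomputes each share of the screening funnel; the variable names and the `pct` helper are made up for illustration, while the counts are the ones quoted from the paper above.

```python
# Counts as quoted from the paper's results paragraph; the labels are mine.
identified = 390          # records found across the 3 databases
duplicates = 134          # removed as duplicates
after_dedup = identified - duplicates
abstract_selected = 69    # selected based on abstracts
assessed = 27             # full texts assessed for eligibility
included = 21             # studies in the final review


def pct(part, whole):
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


stages = [
    ("duplicates / identified", duplicates, identified),
    ("after de-duplication / identified", after_dedup, identified),
    ("abstract-selected / after de-duplication", abstract_selected, after_dedup),
    ("assessed / abstract-selected", assessed, abstract_selected),
    ("included / assessed", included, assessed),
    ("included / after de-duplication", included, after_dedup),
    ("included / identified", included, identified),
]

for label, part, whole in stages:
    print(f"{label}: {part} of {whole} = {pct(part, whole)}%")
```

Measured against all 390 identified records rather than the 256 de-duplicated ones, the included share drops to roughly 5.4 per cent.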

This analysis of the 21 studies provides a comprehensive overview of the many impacts of misinformation during the COVID-19 pandemic, including its characteristics, themes, sources, effects, and public health communication strategies.

I’m sure™ of that; care to learn what kind of topics the intrepid researchers™ excluded? Here is their graphical overview (with emphases added):

So, basically, the authors excluded 48 papers discussing ‘vaccine hesitancy’ (sic), ‘vaccination challenges’, and several other relevant aspects, incl. substance abuse, mental effects of social distancing, the shit gov’t ordered young people to go through, and whatever absurdity falls into ‘specific health impacts’ (my guess would be: vaccine injuries).

Here’s how the results of the included papers varied (according to Kisa & Kisa):

The included studies exhibited considerable diversity in terms of their methodologies, geographic focus, and objectives (Table 1). Verma et al [15] conducted a large-scale observational study in the United States, analyzing social media data from >76,000 users of Twitter (subsequently rebranded X) to establish a causal link between misinformation sharing and increased anxiety. By contrast, Loomba et al [11] carried out a randomized controlled trial in both the United Kingdom and the United States to examine the impact of misinformation on COVID-19 vaccination intent across different sociodemographic groups. In the United States, Bokemper et al [22] used randomized trials to assess the efficacy of various public health messages in promoting social distancing. Xue et al [23] used observational methods to explore public attitudes toward COVID-19 vaccines and the role of fact-checking information on social media.

There’s also a listing of all the 21 studies that made the cut, and I’ll merely cite two more paragraphs of the authors’ findings to round off discussion of this masterpiece:

The spread of misinformation resulted in decreased public trust in science [14], undermining the effectiveness of public health messaging [22] and leading to increased vaccine hesitancy [27,29,31,32]. This hesitancy was further exacerbated by the promotion of antivaccine propaganda, posing a barrier to achieving herd immunity [30]. The extent of the impact of misinformation was also evident in the public’s mental health, with reports of increased anxiety, suicidal thoughts, and distress [2], as well as in overall public attitudes toward the pandemic [26] and changes in public attitudes toward vaccines, which became increasingly negative over time [23].

Get that: at no point do Kisa & Kisa recognise, let alone consider, the fact that public health/science was totally wrong in promoting these injectable products as preventing transmission (one big, fat, stinkin’ lie) or infection. I mean, whatever else these poison/death juices do, they cannot induce herd immunity.

Guess what: once broken, trust is hard, if not impossible, to restore (just ask couples where one of the partners cheated), esp. if something concerns bodily autonomy and integrity, as well as the gov’t mandating medical interventions with untested gene therapy products, such as the modRNA poison/death juices of BioNTech/Pfizer and Moderna.

Measured Outcomes

The studies highlighted the challenges that individuals and communities faced in navigating the pandemic amid a flood of misinformation (Table 2). It was reported that misinformation significantly impacted health care professionals, leading to discomfort, distraction, and difficulty in discerning accurate information [oh, please spare a crocodile tear here—for I sense that Kisa & Kisa kinda sense that a reckoning is coming]. This impact affected decision-making and routine practices [24]. The public’s response was manifested by changes in search behaviors and purchasing patterns, reflecting the influence of rumors and celebrity endorsements [10]. It was reported that “fake news” significantly affected the information landscape, skewing the perception of truth versus lies [3] [would that include the lies about the poison/death juices preventing transmission and/or infection?]. Hesitancy was reported in intent to receive COVID-19 vaccines across demographic groups [11,27,31]. The misinformation also altered health behaviors, such as handwashing and the use of disinfectants, and influenced preventive behavioral intentions [4,14]. It was also reported that misinformation affected public adherence to COVID-19 prevention, risk avoidance behaviors, and vaccination intentions [25].

As regards the second half of these findings™, well, fuck yeah.

Here’s my long-form personal account of travelling in 2020/21:

How The Science™ Communicates These Findings

Now, we’ll shift gears somewhat and look at the accompanying press release (15 May 2025) by Kristiania University of Applied Sciences:

New study: Conspiracy theories threaten public health…

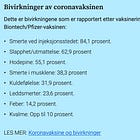

Professors Adnan and Sezer Kisa [remember their methods section? ‘In cases of disagreement, the reviewers engaged in discussions until a consensus was reached’, which means that husband and wife would discuss™ matters ‘until consensus was reached’: that’s what apparently passes for ‘good scientific practices’ these days; you can’t make this up], associated with Høyskolen Kristiania and OsloMet respectively, reviewed 1792 studies globally and analyzed 25 studies [note that the press release is spreading misinformation here: the authors themselves wrote they selected 390 or 256 papers and discussed 21 in full] that met strict research criteria. They found that conspiracy theories not only weaken trust in vaccines and medical treatment, but also contribute to anxiety, depression and general mistrust, especially among people with lower education and fewer resources [apparently me, tenured faculty, counts among the rabble here: hi, dear colleagues (morons)].

‘Conspiracy stories thrive where trust is fragile and knowledge is unevenly distributed. It reinforces existing health differences’, says Sezer Kisa, professor at OsloMet [faculty profile].

Social media as fuel

The study highlights the central role social media plays in the spread of conspiracy theories. Algorithms prioritize emotional and sensational content, and misinformation often spreads faster than verified [sic] facts [let’s note, for completeness’ sake, that since Big Tech was doing this in cahoots with and/or following Big Gov’t orders, the Covid-19 shitshow apparently came with the secondary target of fracturing the public further: remember Avril Haines’s ‘flood the zone’ comment during the infamous Event 201 exercise?].

‘During the Zika and COVID-19 outbreaks, rumors and misinformation were shared far more often than reliable health information’, says Adnan Kisa, professor at the School of Health Sciences at Høyskolen Kristiania [faculty profile].

And, of course, there is no reflection on part of those who went all-in on the Covid Mania:

The researchers point to several possible measures to counter conspiracy theories:

Preventive information campaigns that make people more resistant to misinformation [I’m all for it, you misinfo-spreaders]

Increased media literacy [which is the core aim of my little Substack]

Better scientific understanding [lol, sure]

‘There is no quick solution. In order to reduce the harmful effects, we must work long-term and purposefully to strengthen health skills and rebuild trust’, says Adnan Kisa.

Here’s a novel idea to restore trust: haul those dingbats who are responsible for the Covid shitshow in front of Grand Juries with full discovery in a public court of law.

Yet, as the example of Anthony Fauci and Francis Collins shows, not even being ‘untruthful’ (their quote, not mine) under oath appears to be a problem here.

Bottom Lines

In lieu of more angry words levelled at these two researchers™ (however much they are warranted), I may as well direct you to the below-linked piece—a surprisingly badly-aged overview of ‘seven conspiracy theories about Covid vaccination’ from 2021, which I read back then and discussed in detail earlier this year:

You may also wish to revisit my piece featuring Jörn Klein, a professor of microbiology at the University of South-Eastern Norway (faculty profile), who recommended that people take yet another booster in autumn 2024 (click on the link, which features a picture of him wearing what looks like a speedo on his face in summer 2020):

My pages are full of the receipts of such misinformation™ about Covid-19.

One day, assholes, you’ll be very unhappy that you peddled this kind of nonsense.

And when that day comes, no amount of obfuscation (‘we did what we did based on what we knew at the time’), deflection (‘look over there, we followed the Science™’), and equivocation (‘following guidelines is all we did’) will save your reputations.

Just check out the on-the-record emails I exchanged with Norway’s chief epidemiologist and vaccine™-pusher Preben Aavitsland:

This is me keeping score.

One day, before too long, I hope, your smug obfuscation will be your downfall.

You’re welcome, assholes.
